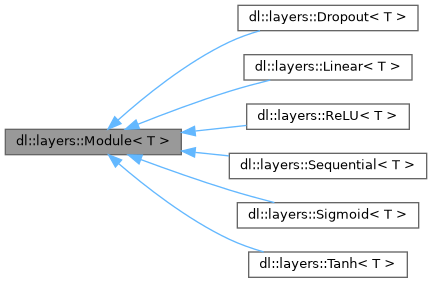

Base class for all neural network modules (PyTorch-like nn.Module).

#include <layers.hpp>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| virtual | ~Module () = default | |
| virtual Variable< T > | forward (const Variable< T > &input) = 0 | Forward pass through the module. |
| virtual std::vector< Variable< T > * > | parameters () = 0 | Get all parameters of this module. |
| virtual void | zero_grad () | Zero gradients of all parameters. |
| virtual void | train (bool training = true) | Set training mode. |
| virtual void | eval () | Set evaluation mode. |
| bool | is_training () const | Check if module is in training mode. |

Protected Attributes

| Type | Member |
|---|---|
| bool | training_ = true |

Detailed Description

class dl::layers::Module< T >

Base class for all neural network modules (PyTorch-like nn.Module)

Definition at line 28 of file layers.hpp.

Constructor & Destructor Documentation

◆ ~Module()

virtual ~Module () = default [virtual, default]

Member Function Documentation

◆ eval()

virtual void eval () [inline, virtual]

Set evaluation mode.

Definition at line 65 of file layers.hpp.

◆ forward()

virtual Variable< T > forward (const Variable< T > &input) = 0 [pure virtual]

Forward pass through the module.

- Parameters
  - input: Input variable
- Returns
  - Output variable

Implemented in dl::layers::Linear< T >, dl::layers::ReLU< T >, dl::layers::Sigmoid< T >, dl::layers::Tanh< T >, dl::layers::Dropout< T >, and dl::layers::Sequential< T >.
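As an illustration of how a concrete layer might satisfy this interface, the sketch below derives a hypothetical Scale layer. Variable and Module in this example are simplified stand-ins defined inside the snippet; the real classes in layers.hpp are not shown on this page and may differ. The names Scale, factor_, value, grad, and scale_demo are assumptions made for the example only.

```cpp
#include <cassert>
#include <vector>

namespace sketch {

// Stand-in for the library's Variable<T> (assumption: the real class differs).
template <typename T>
struct Variable {
    T value;
    T grad;
};

// Stand-in mirroring the two pure virtual members documented on this page.
template <typename T>
class Module {
public:
    virtual ~Module() = default;
    virtual Variable<T> forward(const Variable<T>& input) = 0;
    virtual std::vector<Variable<T>*> parameters() = 0;
};

// Hypothetical layer: multiplies its input by a single learnable factor.
template <typename T>
class Scale : public Module<T> {
public:
    explicit Scale(T factor) : factor_{factor, T{}} {}

    // Produces a new variable; the input is left untouched.
    Variable<T> forward(const Variable<T>& input) override {
        return Variable<T>{input.value * factor_.value, T{}};
    }

    // Exposes the learnable state as non-owning pointers.
    std::vector<Variable<T>*> parameters() override {
        return {&factor_};
    }

private:
    Variable<T> factor_;
};

// Calls forward() through the base-class interface.
inline double scale_demo() {
    Scale<double> layer(3.0);
    Module<double>& module = layer;
    return module.forward(Variable<double>{2.0, 0.0}).value;
}

}  // namespace sketch
```

Because both members are pure virtual, a derived class has to override forward() and parameters() before it can be instantiated. A layer without learnable state could presumably return an empty vector from parameters().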

◆ is_training()

bool is_training () const [inline]

Check if module is in training mode.

Definition at line 72 of file layers.hpp.

◆ parameters()

virtual std::vector< Variable< T > * > parameters () = 0 [pure virtual]

Get all parameters of this module.

- Returns
  - Vector of parameter variables

Implemented in dl::layers::Linear< T >, dl::layers::ReLU< T >, dl::layers::Sigmoid< T >, dl::layers::Tanh< T >, dl::layers::Dropout< T >, and dl::layers::Sequential< T >.
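Since parameters() returns pointers rather than copies, a caller can update the module's state in place. The sketch below shows one way an update step could consume such a vector. The Variable struct is a stand-in with assumed value and grad fields, and sgd_step is a hypothetical helper, not a function documented for this library.

```cpp
#include <cassert>
#include <vector>

namespace sketch {

// Stand-in for the library's Variable<T> (assumed fields: value, grad).
template <typename T>
struct Variable {
    T value;
    T grad;
};

// Hypothetical helper: one plain gradient-descent step over a parameter list,
// such as the vector returned by Module<T>::parameters().
template <typename T>
void sgd_step(const std::vector<Variable<T>*>& params, T learning_rate) {
    for (Variable<T>* p : params) {
        p->value -= learning_rate * p->grad;  // mutates the module's own state
    }
}

// Applies one step to a single parameter and returns its new value.
inline double sgd_demo() {
    Variable<double> weight{1.0, 0.5};
    std::vector<Variable<double>*> params{&weight};
    sgd_step(params, 0.5);
    return weight.value;  // 1.0 - 0.5 * 0.5
}

}  // namespace sketch
```

The pointers are non-owning, so they stay valid only while the module that produced them is alive.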

◆ train()

virtual void train (bool training = true) [inline, virtual]

Set training mode.

- Parameters
  - training: Whether in training mode

Reimplemented in dl::layers::Sequential< T >.

Definition at line 58 of file layers.hpp.
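Sequential reimplements train(), presumably so that the mode reaches the modules it contains. The sketch below shows that pattern with a simplified, non-template stand-in for Module. Container and mode_demo are hypothetical names, and the assumption that eval() behaves like train(false) mirrors PyTorch rather than anything stated on this page.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

namespace sketch {

// Simplified stand-in for Module<T>: only the mode-related members.
class Module {
public:
    virtual ~Module() = default;
    virtual void train(bool training = true) { training_ = training; }
    // Assumption: eval() is equivalent to train(false), as in PyTorch.
    virtual void eval() { train(false); }
    bool is_training() const { return training_; }

protected:
    bool training_ = true;
};

// Hypothetical container that forwards the mode to every child.
class Container : public Module {
public:
    void add(std::shared_ptr<Module> child) {
        children_.push_back(std::move(child));
    }

    void train(bool training = true) override {
        Module::train(training);  // update this module's own flag
        for (auto& child : children_) {
            child->train(training);  // then propagate to the children
        }
    }

private:
    std::vector<std::shared_ptr<Module>> children_;
};

// Returns true if both eval() and train() reach the nested module.
inline bool mode_demo() {
    auto leaf = std::make_shared<Module>();
    Container net;
    net.add(leaf);

    net.eval();
    const bool eval_ok = !net.is_training() && !leaf->is_training();

    net.train();
    return eval_ok && net.is_training() && leaf->is_training();
}

}  // namespace sketch
```

Under the stated assumption, a container only needs to override train(), because eval() dispatches to it virtually.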

◆ zero_grad()

virtual void zero_grad () [inline, virtual]

Zero gradients of all parameters.

Reimplemented in dl::layers::Sequential< T >.

Definition at line 48 of file layers.hpp.
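This page does not show the body of zero_grad(). One plausible default, sketched below, walks the vector returned by parameters() and resets each gradient. The Variable stand-in with its grad field, the Bias layer, and zero_grad_demo are all assumptions made for the example.

```cpp
#include <cassert>
#include <vector>

namespace sketch {

// Stand-in for the library's Variable<T> (assumed fields: value, grad).
template <typename T>
struct Variable {
    T value;
    T grad;
};

// Stand-in for Module<T>, reduced to the members this sketch needs.
template <typename T>
class Module {
public:
    virtual ~Module() = default;
    virtual std::vector<Variable<T>*> parameters() = 0;

    // Assumed default: clear the gradient of every exposed parameter.
    virtual void zero_grad() {
        for (Variable<T>* p : parameters()) {
            p->grad = T{};
        }
    }
};

// Hypothetical layer holding one parameter with a non-zero initial gradient.
template <typename T>
class Bias : public Module<T> {
public:
    Bias() : bias_{T{}, T{1}} {}
    std::vector<Variable<T>*> parameters() override { return {&bias_}; }
    T grad() const { return bias_.grad; }

private:
    Variable<T> bias_;
};

// Returns true if zero_grad() clears a previously non-zero gradient.
inline bool zero_grad_demo() {
    Bias<double> layer;
    const bool had_grad = layer.grad() == 1.0;
    layer.zero_grad();
    return had_grad && layer.grad() == 0.0;
}

}  // namespace sketch
```

With a default like this, a derived layer gets zero_grad() for free once parameters() is implemented.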

Member Data Documentation

◆ training_

bool training_ = true [protected]

Definition at line 75 of file layers.hpp.

The documentation for this class was generated from the following file:

- include/neural_network/layers.hpp