Adam optimizer with autograd support.

#include <optimizers.hpp>

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| | `Adam(std::vector<Variable<T>*> parameters, T lr = 1e-3, T beta1 = 0.9, T beta2 = 0.999, T eps = 1e-8, T weight_decay = 0.0)` | Constructor. |
| `void` | `step() override` | Perform one Adam step. |
| `T` | `get_lr() const override` | Get the learning rate. |
| `void` | `set_lr(T lr) override` | Set the learning rate. |

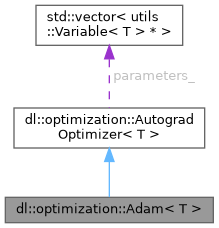

Public Member Functions inherited from dl::optimization::AutogradOptimizer< T >

| Type | Member | Description |
| --- | --- | --- |
| | `AutogradOptimizer(std::vector<Variable<T>*> parameters)` | Constructor. |
| `virtual` | `~AutogradOptimizer() = default` | |
| `virtual void` | `zero_grad()` | Zero the gradients of all parameters. |

Additional Inherited Members

Protected Attributes inherited from dl::optimization::AutogradOptimizer< T >

| Type | Member |
| --- | --- |
| `std::vector<Variable<T>*>` | `parameters_` |
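The inherited interface can be pictured as a small abstract base class. The sketch below is a hypothetical reconstruction, not the library's code: it assumes `Variable<T>` stores its values and gradient in `data` and `grad` vectors (the real `Variable` API is not documented on this page), and it declares `step()`, `get_lr()` and `set_lr()` pure virtual because the member documentation says `Adam` "implements" them.

```cpp
#include <utility>
#include <vector>

// Hypothetical stand-in for Variable<T>: only the two fields this sketch needs.
template <typename T>
struct Variable {
    std::vector<T> data;  // parameter values
    std::vector<T> grad;  // accumulated gradient, same length as data
};

// Hypothetical reconstruction of the documented AutogradOptimizer<T> interface.
template <typename T>
class AutogradOptimizer {
public:
    explicit AutogradOptimizer(std::vector<Variable<T>*> parameters)
        : parameters_(std::move(parameters)) {}
    virtual ~AutogradOptimizer() = default;

    // Assumed pure virtual: concrete optimizers such as Adam<T> implement these.
    virtual void step() = 0;
    virtual T get_lr() const = 0;
    virtual void set_lr(T lr) = 0;

    // Reset every parameter's gradient to zero before the next backward pass.
    virtual void zero_grad() {
        for (Variable<T>* p : parameters_) {
            for (T& g : p->grad) g = T(0);
        }
    }

protected:
    std::vector<Variable<T>*> parameters_;  // non-owning pointers to the parameters
};
```

Storing raw pointers mirrors the documented `std::vector< Variable< T > * >` member: the optimizer does not own the parameters, so they must outlive it.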

Detailed Description

class dl::optimization::Adam< T >

Adam optimizer with autograd support.

Adaptive learning-rate optimizer that maintains an individual effective step size for each parameter, computed from running estimates of the first moment (mean) and second moment (uncentered variance) of its gradients.

Paper: "Adam: A Method for Stochastic Optimization" (Kingma & Ba, 2014)
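For reference, the update from the Kingma & Ba paper is written out below, with $\alpha$ = `lr`, $\beta_1$ = `beta1`, $\beta_2$ = `beta2`, $\epsilon$ = `eps`, $\lambda$ = `weight_decay`, $m_0 = v_0 = 0$ and $t$ counting calls to `step()`. Folding $\lambda\theta$ into the gradient is an assumption based on the "L2 penalty" wording in the constructor documentation; this page does not show whether the implementation applies the bias correction exactly as in the paper.

```latex
\begin{aligned}
g_t       &= \nabla_\theta f(\theta_{t-1}) + \lambda\,\theta_{t-1} \\
m_t       &= \beta_1\, m_{t-1} + (1-\beta_1)\, g_t \\
v_t       &= \beta_2\, v_{t-1} + (1-\beta_2)\, g_t^2 \\
\hat m_t  &= \frac{m_t}{1-\beta_1^t}, \qquad \hat v_t = \frac{v_t}{1-\beta_2^t} \\
\theta_t  &= \theta_{t-1} - \alpha\, \frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}
\end{aligned}
```

All operations are elementwise, so every scalar parameter gets its own effective step size $\alpha / (\sqrt{\hat v_t} + \epsilon)$.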

Definition at line 131 of file optimizers.hpp.

Constructor & Destructor Documentation

◆ Adam()

`dl::optimization::Adam<T>::Adam(std::vector<Variable<T>*> parameters, T lr = 1e-3, T beta1 = 0.9, T beta2 = 0.999, T eps = 1e-8, T weight_decay = 0.0)`

Constructor.

Parameters

- `parameters`: Pointers to the parameters to optimize.
- `lr`: Learning rate (default: 1e-3).
- `beta1`: Exponential decay rate for the first-moment estimate (default: 0.9).
- `beta2`: Exponential decay rate for the second-moment estimate (default: 0.999).
- `eps`: Small constant for numerical stability (default: 1e-8).
- `weight_decay`: Weight decay (L2 penalty) coefficient (default: 0).
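To show how `beta1`, `beta2` and `eps` interact, the sketch below computes the very first update for one scalar parameter, assuming the standard bias-corrected rule from the paper (this page does not confirm the implementation's exact formula) and ignoring weight decay. `first_adam_update` is a hypothetical helper, not a library function. Because the bias-corrected moments on step 1 are exactly $g$ and $g^2$, the first step has magnitude close to `lr` whatever the scale of the gradient; `eps` only matters when $|g|$ is comparable to it.

```cpp
#include <cmath>

// Hypothetical helper: the bias-corrected Adam update for a single scalar
// parameter on the first call (t = 1, m_0 = v_0 = 0). Weight decay omitted.
double first_adam_update(double grad, double lr = 1e-3, double beta1 = 0.9,
                         double beta2 = 0.999, double eps = 1e-8) {
    const int t = 1;
    const double m = (1.0 - beta1) * grad;                 // m_1
    const double v = (1.0 - beta2) * grad * grad;          // v_1
    const double m_hat = m / (1.0 - std::pow(beta1, t));   // equals grad
    const double v_hat = v / (1.0 - std::pow(beta2, t));   // equals grad^2
    return -lr * m_hat / (std::sqrt(v_hat) + eps);         // about -lr * sign(grad)
}
```

With the default hyperparameters, `first_adam_update(100.0)` and `first_adam_update(-0.01)` both move the parameter by roughly 1e-3, in the direction opposite the gradient.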

Definition at line 45 of file optimizers.cpp.

Member Function Documentation

◆ get_lr()

`T get_lr() const override` (inline, override, virtual)

Get learning rate.

Implements dl::optimization::AutogradOptimizer< T >.

Definition at line 157 of file optimizers.hpp.

◆ set_lr()

`void set_lr(T lr) override` (inline, override, virtual)

Set learning rate.

Implements dl::optimization::AutogradOptimizer< T >.

Definition at line 162 of file optimizers.hpp.
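`get_lr()` and `set_lr()` are enough to drive a learning-rate schedule from outside the optimizer. The sketch below is a hypothetical step-decay helper (`step_decay` is not part of the library); it assumes nothing beyond the two documented accessors, so it should accept an `Adam<T>` as well as any other type exposing them.

```cpp
// Hypothetical helper, not part of the library: multiplies the learning rate
// by `gamma` every `step_size` epochs ("step decay").
template <typename Optimizer>
void step_decay(Optimizer& opt, int epoch, int step_size, double gamma) {
    // Decay at epochs step_size, 2 * step_size, ...; epoch 0 keeps the initial rate.
    if (epoch > 0 && step_size > 0 && epoch % step_size == 0) {
        opt.set_lr(opt.get_lr() * gamma);
    }
}
```

Called at the start of each epoch with `step_size = 10` and `gamma = 0.5` on an optimizer built with the default `lr` of 1e-3, it would halve the rate to 5e-4 at epoch 10 and to 2.5e-4 at epoch 20.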

◆ step()

`void step() override` (override, virtual)

Perform one Adam step, updating every parameter from its current gradient.

Implements dl::optimization::AutogradOptimizer< T >.

Definition at line 71 of file optimizers.cpp.
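A per-element sketch of what `step()` computes, assuming the standard algorithm from the paper. Everything here is hypothetical: `MiniAdam` and the two-field `Variable` stand-in are illustrative names, weight decay is assumed to be the coupled L2 form (added to the gradient, unlike AdamW's decoupled decay), and the actual code at line 71 of optimizers.cpp may differ in details such as where `eps` is applied.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for Variable<T>: parameter values and their gradient.
template <typename T>
struct Variable {
    std::vector<T> data;
    std::vector<T> grad;
};

// Minimal Adam sketch (Kingma & Ba) with coupled L2 weight decay.
template <typename T>
class MiniAdam {
public:
    MiniAdam(std::vector<Variable<T>*> params, T lr = T(1e-3), T beta1 = T(0.9),
             T beta2 = T(0.999), T eps = T(1e-8), T weight_decay = T(0))
        : params_(std::move(params)), lr_(lr), beta1_(beta1), beta2_(beta2),
          eps_(eps), weight_decay_(weight_decay) {
        // One zero-initialised moment buffer per parameter tensor.
        for (Variable<T>* p : params_) {
            m_.emplace_back(p->data.size(), T(0));
            v_.emplace_back(p->data.size(), T(0));
        }
    }

    void step() {
        ++t_;
        // Bias-correction denominators 1 - beta^t.
        const T bc1 = T(1) - std::pow(beta1_, static_cast<T>(t_));
        const T bc2 = T(1) - std::pow(beta2_, static_cast<T>(t_));
        for (std::size_t k = 0; k < params_.size(); ++k) {
            Variable<T>& p = *params_[k];
            for (std::size_t i = 0; i < p.data.size(); ++i) {
                // L2 penalty folded into the gradient.
                const T g = p.grad[i] + weight_decay_ * p.data[i];
                m_[k][i] = beta1_ * m_[k][i] + (T(1) - beta1_) * g;
                v_[k][i] = beta2_ * v_[k][i] + (T(1) - beta2_) * g * g;
                const T m_hat = m_[k][i] / bc1;
                const T v_hat = v_[k][i] / bc2;
                p.data[i] -= lr_ * m_hat / (std::sqrt(v_hat) + eps_);
            }
        }
    }

    T get_lr() const { return lr_; }
    void set_lr(T lr) { lr_ = lr; }

private:
    std::vector<Variable<T>*> params_;   // non-owning
    T lr_, beta1_, beta2_, eps_, weight_decay_;
    std::vector<std::vector<T>> m_, v_;  // first and second moment estimates
    long t_ = 0;                         // number of steps taken so far
};
```

For example, repeatedly setting `grad` to 2(x - 3) and calling `step()` drives x toward 3, the minimizer of (x - 3)^2. Keeping the moment buffers inside the optimizer, indexed in the same order as the parameter list, is one simple way to give each parameter its own state.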

The documentation for this class was generated from the following files:

- include/optimization/optimizers.hpp

- src/optimization/optimizers.cpp