
layers.hpp File Reference

PyTorch-like neural network layers with automatic differentiation.

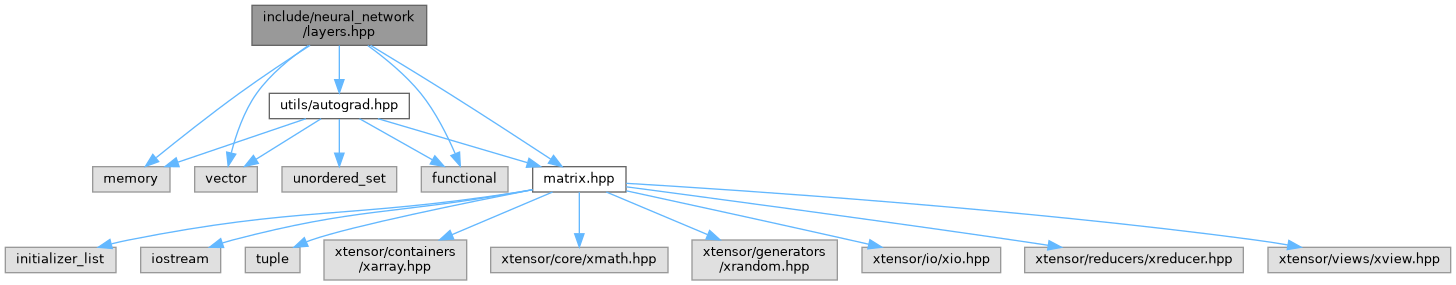

`#include <memory>`

`#include <vector>`

`#include <functional>`

`#include "utils/autograd.hpp"`

`#include "utils/matrix.hpp"`

Include dependency graph for layers.hpp:

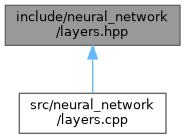

This graph shows which files directly or indirectly include this file:


Classes

| Class | Description |
|---|---|
| `dl::layers::Module<T>` | Base class for all neural network modules (PyTorch-like `nn.Module`) |
| `dl::layers::Linear<T>` | Linear (fully connected) layer: y = xW^T + b |
| `dl::layers::ReLU<T>` | ReLU activation function |
| `dl::layers::Sigmoid<T>` | Sigmoid activation function |
| `dl::layers::Tanh<T>` | Tanh activation function |
| `dl::layers::Dropout<T>` | Dropout layer for regularization |
| `dl::layers::Sequential<T>` | Sequential container for chaining modules |
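These classes follow PyTorch's `nn.Module` pattern: each layer exposes a forward pass, and `Sequential` chains layers in order. The sketch below is a hypothetical, forward-only stand-in written against plain `std::vector` rows rather than the library's `utils/matrix.hpp` type. The member names (`forward`, `training`, `layers`, `W`, `b`) and the `Mat` alias are assumptions, not the real `dl::layers` API, and gradients are omitted. `Dropout` here uses the common inverted-dropout convention, which the library may or may not follow.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <random>
#include <utility>
#include <vector>

// Hypothetical batch type: one std::vector per row (not utils/matrix.hpp).
template <typename T>
using Mat = std::vector<std::vector<T>>;

// Hypothetical base class in the spirit of nn.Module: maps a batch to a batch.
template <typename T>
struct Module {
    bool training = true;  // assumed train/eval flag; only Dropout reads it here
    virtual ~Module() = default;
    virtual Mat<T> forward(const Mat<T>& x) = 0;
};

// y = x W^T + b, with W stored as (out_features x in_features).
template <typename T>
struct Linear : Module<T> {
    Mat<T> W;
    std::vector<T> b;
    Linear(Mat<T> w, std::vector<T> bias) : W(std::move(w)), b(std::move(bias)) {
        assert(W.size() == b.size());
    }
    Mat<T> forward(const Mat<T>& x) override {
        Mat<T> y(x.size(), std::vector<T>(W.size()));
        for (std::size_t n = 0; n < x.size(); ++n) {
            for (std::size_t o = 0; o < W.size(); ++o) {
                assert(x[n].size() == W[o].size());
                T acc = b[o];
                for (std::size_t i = 0; i < W[o].size(); ++i) acc += x[n][i] * W[o][i];
                y[n][o] = acc;
            }
        }
        return y;
    }
};

// Element-wise max(0, x).
template <typename T>
struct ReLU : Module<T> {
    Mat<T> forward(const Mat<T>& x) override {
        Mat<T> y = x;
        for (auto& row : y)
            for (auto& v : row) v = v > T(0) ? v : T(0);
        return y;
    }
};

// Inverted dropout: in training, zero each element with probability p and
// scale survivors by 1 / (1 - p); in evaluation mode, pass the input through.
template <typename T>
struct Dropout : Module<T> {
    T p;
    std::mt19937 rng{42};  // fixed seed for reproducibility in this sketch
    explicit Dropout(T prob) : p(prob) { assert(prob >= T(0) && prob < T(1)); }
    Mat<T> forward(const Mat<T>& x) override {
        if (!this->training || p == T(0)) return x;
        std::bernoulli_distribution keep(1.0 - static_cast<double>(p));
        Mat<T> y = x;
        for (auto& row : y)
            for (auto& v : row) v = keep(rng) ? v / (T(1) - p) : T(0);
        return y;
    }
};

// Applies child modules in insertion order, like nn.Sequential.
template <typename T>
struct Sequential : Module<T> {
    std::vector<std::unique_ptr<Module<T>>> layers;
    Mat<T> forward(const Mat<T>& x) override {
        Mat<T> h = x;
        for (auto& layer : layers) h = layer->forward(h);
        return h;
    }
};
```

For example, a `Sequential<double>` holding a `Linear` with weights {{1, -1}, {2, 0.5}} and bias {0, -7}, followed by a `ReLU`, maps the input row {3, 1} to {2, 0}: the second pre-activation is 6 + 0.5 - 7 = -0.5, which ReLU clamps to 0.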

Namespaces

| Namespace |
|---|
| `dl` |
| `dl::layers` |

Typedefs

| Alias | Definition |
|---|---|
| `dl::layers::LinearD` | `Linear<double>` |
| `dl::layers::LinearF` | `Linear<float>` |
| `dl::layers::ReLUD` | `ReLU<double>` |
| `dl::layers::ReLUF` | `ReLU<float>` |
| `dl::layers::SigmoidD` | `Sigmoid<double>` |
| `dl::layers::SigmoidF` | `Sigmoid<float>` |
| `dl::layers::TanhD` | `Tanh<double>` |
| `dl::layers::TanhF` | `Tanh<float>` |
| `dl::layers::DropoutD` | `Dropout<double>` |
| `dl::layers::DropoutF` | `Dropout<float>` |
| `dl::layers::SequentialD` | `Sequential<double>` |
| `dl::layers::SequentialF` | `Sequential<float>` |
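Each layer template is paired with a `D` (double) and an `F` (float) alias. A hypothetical element-wise stand-in for `Sigmoid<T>` and `Tanh<T>` illustrates that alias pattern together with the derivatives a backward pass would need. The static `forward`/`backward` functions are illustrative assumptions, not the library's interface, which operates on whole matrices rather than scalars.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical element-wise stand-in for Sigmoid<T>; not the library's API.
template <typename T>
struct Sigmoid {
    static T forward(T x) { return T(1) / (T(1) + std::exp(-x)); }
    // Derivative expressed through the forward output s: s * (1 - s).
    static T backward(T s) {
        assert(s >= T(0) && s <= T(1));
        return s * (T(1) - s);
    }
};

// Hypothetical element-wise stand-in for Tanh<T>.
template <typename T>
struct Tanh {
    static T forward(T x) { return std::tanh(x); }
    // Derivative expressed through the forward output t: 1 - t^2.
    static T backward(T t) {
        assert(t >= T(-1) && t <= T(1));
        return T(1) - t * t;
    }
};

// Precision aliases in the same D (double) / F (float) style as the typedef table.
using SigmoidD = Sigmoid<double>;
using SigmoidF = Sigmoid<float>;
using TanhD = Tanh<double>;
using TanhF = Tanh<float>;
```

Writing each derivative in terms of the forward output lets a backward pass reuse the cached activation instead of recomputing `exp` or `tanh`.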

Detailed Description

PyTorch-like neural network layers with automatic differentiation.

Version: 1.0.0

Definition in file layers.hpp.
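Reverse-mode automatic differentiation records each operation's inputs during the forward pass, then walks the resulting graph from the output back to the inputs, applying the chain rule at every node. The minimal scalar sketch below illustrates that idea only. `Node`, `Var`, `make_var`, `mul`, `add`, `tanh_v`, and `backward` are hypothetical names; the actual `utils/autograd.hpp` may represent tensors and the graph quite differently.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <memory>
#include <unordered_set>
#include <vector>

// Hypothetical scalar graph node; the real utils/autograd.hpp may differ.
struct Node {
    double value;
    double grad = 0.0;
    std::vector<std::shared_ptr<Node>> parents;
    std::function<void()> backward_fn = [] {};  // adds this node's grad into its parents
    explicit Node(double v) : value(v) {}
};
using Var = std::shared_ptr<Node>;

Var make_var(double v) { return std::make_shared<Node>(v); }

// Each op computes its value now and records how to push gradients back later.
// The closure holds a raw pointer to its own node to avoid a shared_ptr cycle.
Var mul(const Var& a, const Var& b) {
    Var out = make_var(a->value * b->value);
    out->parents = {a, b};
    Node* o = out.get();
    out->backward_fn = [a, b, o] {
        a->grad += b->value * o->grad;  // d(ab)/da = b
        b->grad += a->value * o->grad;  // d(ab)/db = a
    };
    return out;
}

Var add(const Var& a, const Var& b) {
    Var out = make_var(a->value + b->value);
    out->parents = {a, b};
    Node* o = out.get();
    out->backward_fn = [a, b, o] {
        a->grad += o->grad;
        b->grad += o->grad;
    };
    return out;
}

Var tanh_v(const Var& a) {
    Var out = make_var(std::tanh(a->value));
    out->parents = {a};
    Node* o = out.get();
    out->backward_fn = [a, o] { a->grad += (1.0 - o->value * o->value) * o->grad; };
    return out;
}

// Seeds d(root)/d(root) = 1, then runs each node's backward_fn in reverse
// topological order so a node's grad is complete before it is propagated.
void backward(const Var& root) {
    assert(root != nullptr);
    std::vector<Node*> order;
    std::unordered_set<Node*> seen;
    std::function<void(Node*)> visit = [&](Node* n) {
        if (!seen.insert(n).second) return;
        for (const Var& p : n->parents) visit(p.get());
        order.push_back(n);
    };
    visit(root.get());
    root->grad = 1.0;
    for (auto it = order.rbegin(); it != order.rend(); ++it) (*it)->backward_fn();
}
```

For y = w * x + b at w = 3, x = 2, b = 1, calling `backward(y)` leaves the gradients 2, 3, and 1 in `w`, `x`, and `b`. Gradients are accumulated with `+=` (so a variable used twice, as in `mul(x, x)`, receives both contributions), which also means they must be reset before reusing the same variables in another backward pass.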