optimizers.hpp File Reference

PyTorch-like optimizers with automatic differentiation support.

#include <memory>

#include <vector>

#include <unordered_map>

#include "utils/autograd.hpp"

#include "utils/matrix.hpp"

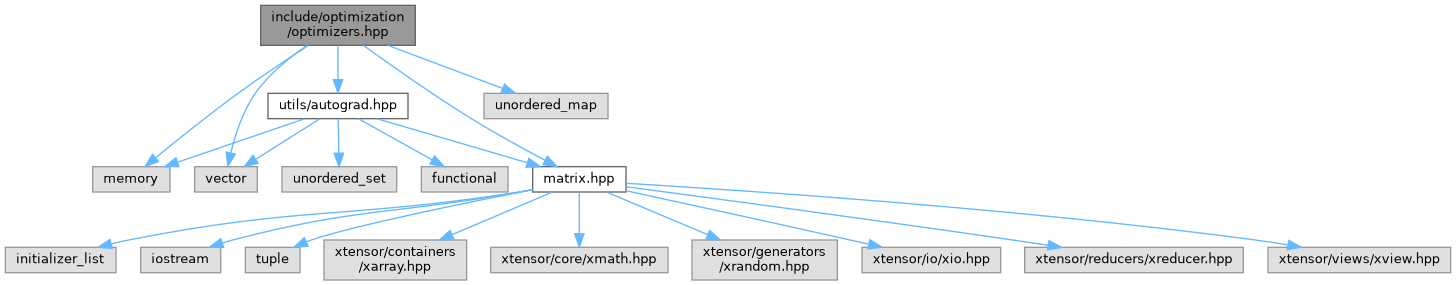

Include dependency graph for optimizers.hpp:

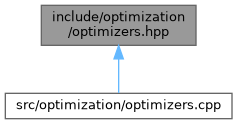

This graph shows which files directly or indirectly include this file:


Classes

| Kind | Name | Description |
| --- | --- | --- |
| class | dl::optimization::AutogradOptimizer< T > | Base class for autograd-compatible optimizers. |
| class | dl::optimization::SGD< T > | Stochastic Gradient Descent optimizer with autograd support. |
| class | dl::optimization::Adam< T > | Adam optimizer with autograd support. |
| class | dl::optimization::AdamW< T > | AdamW optimizer with autograd support. |
| class | dl::optimization::RMSprop< T > | RMSprop optimizer with autograd support. |
| class | dl::optimization::LRScheduler< T > | Learning rate scheduler base class. |
| class | dl::optimization::StepLR< T > | Step learning rate scheduler. Decays the learning rate by gamma every step_size epochs. |
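
The step decay rule described for StepLR can be sketched as a standalone function. This is only an illustration, not the library's implementation: the helper name `step_decay_lr` and its parameters are hypothetical, and the sketch assumes the PyTorch-style convention that the first decay takes effect at epoch `step_size`, so the rate at a given epoch is `base_lr * gamma^floor(epoch / step_size)`.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper, NOT part of optimizers.hpp: returns the learning rate
// in effect at `epoch` under step decay.
// Assumption: the first decay is applied when epoch == step_size.
template <typename T>
T step_decay_lr(T base_lr, T gamma, int step_size, int epoch) {
    assert(step_size > 0 && epoch >= 0);
    // Integer division equals floor() for non-negative operands.
    const int num_decays = epoch / step_size;
    return base_lr * static_cast<T>(std::pow(gamma, num_decays));
}
```

With `base_lr = 0.1`, `gamma = 0.5` and `step_size = 10`, this sketch keeps the rate at 0.1 for epochs 0 to 9, halves it to 0.05 for epochs 10 to 19, and halves it again to 0.025 from epoch 20.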

Namespaces

| Kind | Name |
| --- | --- |
| namespace | dl |
| namespace | dl::optimization |

Typedefs

| Kind | Definition |
| --- | --- |
| using | dl::optimization::SGDD = SGD< double > |
| using | dl::optimization::SGDF = SGD< float > |
| using | dl::optimization::AdamD = Adam< double > |
| using | dl::optimization::AdamF = Adam< float > |
| using | dl::optimization::AdamWD = AdamW< double > |
| using | dl::optimization::AdamWF = AdamW< float > |
| using | dl::optimization::RMSpropD = RMSprop< double > |
| using | dl::optimization::RMSpropF = RMSprop< float > |
| using | dl::optimization::StepLRD = StepLR< double > |
| using | dl::optimization::StepLRF = StepLR< float > |
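
The aliases above follow a simple pattern: a `D` suffix selects the `double` instantiation and an `F` suffix selects `float`. The sketch below shows that pattern on a stand-in class. Everything in it is hypothetical: `sketch::ToySGD`, its constructor, and its `step` signature are invented for illustration, since this page does not show the actual interface of `dl::optimization::SGD`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

namespace sketch {

// Hypothetical stand-in for an optimizer template; NOT dl::optimization::SGD.
template <typename T>
class ToySGD {
public:
    explicit ToySGD(T lr) : lr_(lr) {}

    // Plain gradient descent update: params[i] -= lr * grads[i].
    void step(std::vector<T>& params, const std::vector<T>& grads) const {
        assert(params.size() == grads.size());
        for (std::size_t i = 0; i < params.size(); ++i) {
            params[i] -= lr_ * grads[i];
        }
    }

private:
    T lr_;  // learning rate
};

// Same aliasing pattern as the typedefs listed above: D = double, F = float.
using ToySGDD = ToySGD<double>;
using ToySGDF = ToySGD<float>;

}  // namespace sketch
```

Aliases like these let calling code pick a precision by name, for example `sketch::ToySGDD opt(0.5);`, without spelling out the template argument at each use.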

Detailed Description

PyTorch-like optimizers with automatic differentiation support.

- Version: 1.0.0

Definition in file optimizers.hpp.